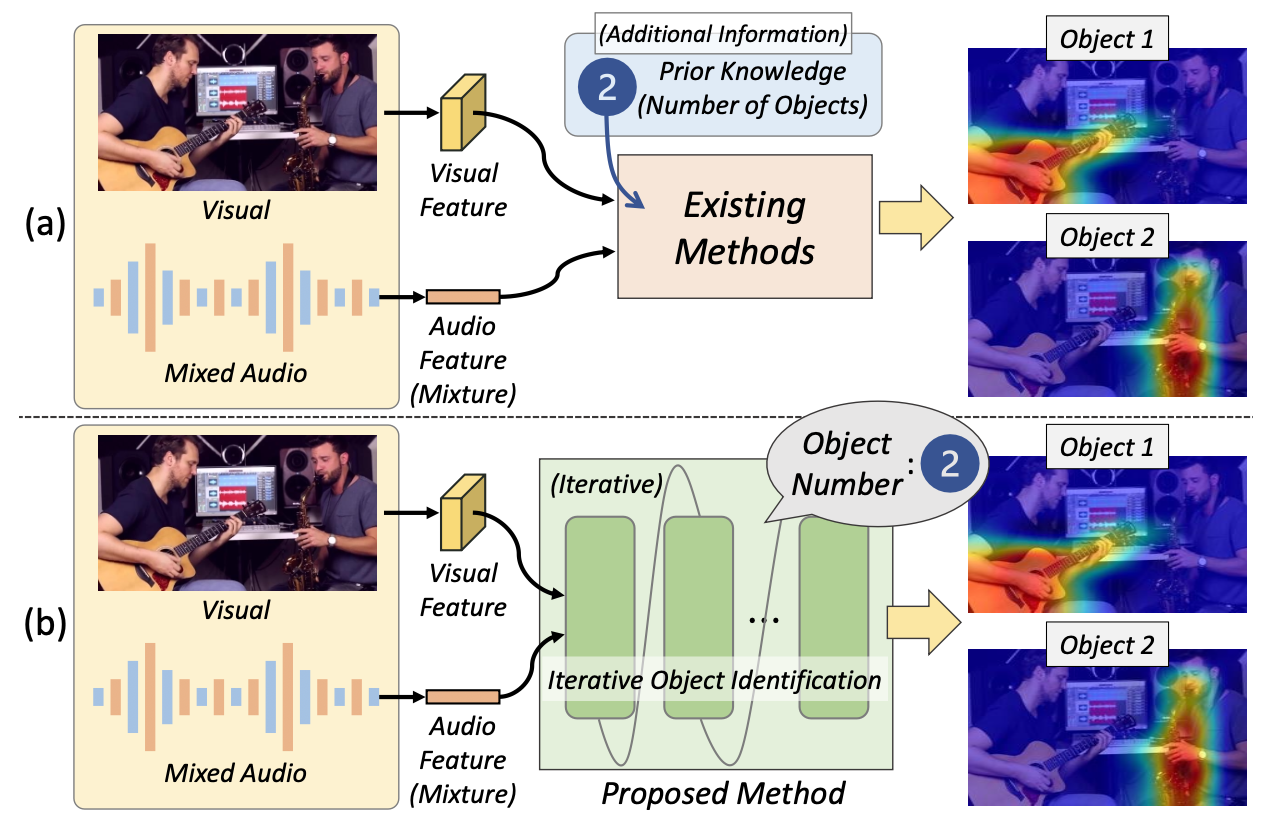

This work introduces a novel method for localizing multiple sound sources in a mixture without prior knowledge of how many sound-emitting objects are present. At its core is an Iterative Object Identification module that detects sound-making objects one at a time and refines the location of each through repeated analysis of audio-visual cues. An Object Similarity-Aware Clustering strategy then encourages image regions (cells) that belong to the same object to merge, while keeping distinct objects separate.
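The identify-then-merge loop described above can be illustrated with a small, non-learned sketch. It is not the authors' implementation. It assumes per-cell visual features and per-cell audio-visual similarity scores are already available, uses fixed thresholds (the hypothetical `score_thresh` and `merge_thresh`) in place of a trained module, and swaps the learned clustering objective for a plain cosine-similarity rule. The function names are illustrative only.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def iterative_identify(
    features: List[Sequence[float]],
    av_scores: List[float],
    score_thresh: float = 0.5,
    merge_thresh: float = 0.8,
) -> List[List[int]]:
    """Hypothetical heuristic: group grid cells into sounding objects one at a time.

    features[i]  -- visual feature of cell i (assumed precomputed)
    av_scores[i] -- audio-visual similarity of cell i (assumed precomputed)
    Returns one sorted list of cell indices per detected object.
    """
    unassigned = set(range(len(features)))
    objects: List[List[int]] = []
    while unassigned:
        # Seed: the most sound-responsive cell not yet claimed by an object.
        seed = max(unassigned, key=lambda i: av_scores[i])
        # Stop once no remaining cell looks like a sound source, so the
        # number of objects is not fixed in advance.
        if av_scores[seed] < score_thresh:
            break
        # Merge cells whose features resemble the seed (same object);
        # dissimilar cells stay unassigned for later iterations.
        # The seed is always included so every iteration makes progress.
        similar = {i for i in unassigned if cosine(features[i], features[seed]) >= merge_thresh}
        members = sorted({seed} | similar)
        objects.append(members)
        unassigned -= set(members)
    return objects


if __name__ == "__main__":
    # Toy scene: cells 0-1 form one object, cells 2-3 another, cell 4 is quiet background.
    feats = [[1.0, 0.0], [0.95, 0.05], [0.0, 1.0], [0.05, 0.95], [0.7, 0.7]]
    scores = [0.9, 0.7, 0.8, 0.6, 0.1]
    print(iterative_identify(feats, scores))  # [[0, 1], [2, 3]]
```

Because the loop ends when no unassigned cell exceeds `score_thresh`, the object count emerges from the data rather than being supplied beforehand; raising `score_thresh` to 0.85 in the toy scene leaves only the first object.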

By combining iterative recognition with similarity-aware clustering, the proposed framework can separate and localize individual sources in real-world scenes where several sounds overlap. Experiments on diverse benchmarks show that our approach consistently outperforms previous methods and remains robust even under heavily mixed conditions.